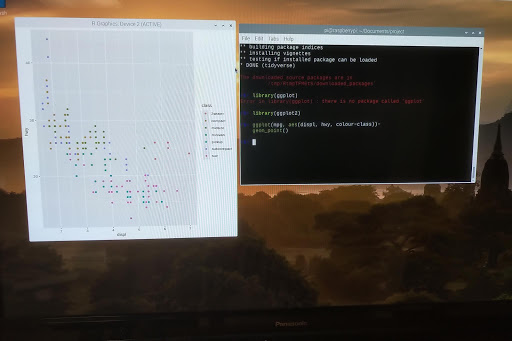

The package is "ggplot2", not "ggplot", but the function is ggplot().

Did you know that the name of @projectJupyter's Jupyter Notebook (and JupyterLab) came from combining three programming languages: JUlia, PYThon and R?

Readers of my blog do not need an introduction to Python. But what about the other 2?

Today we will talk about R. Actually, R and Python, on the Raspberry Pi.

R Origin

R traces its origins to the S statistical programming language, developed in the 1970s at Bell Labs by John M. Chambers and colleagues. Chambers is also the author of Computational Methods for Data Analysis (1977) and a co-author of Graphical Methods for Data Analysis (1983). R is an open source implementation of the S language. It is largely compatible with S but also has enhancements over the original.

A quick getting started guide is available here: https://support.rstudio.com/hc/en-us/sections/200271437-Getting-Started

Installing Python

As a recap, in case you don't have Python 3 and a few basic modules, the installation goes as follows (open a terminal window first):

pi@raspberrypi: $ sudo apt install python3 python3-dev build-essential

pi@raspberrypi: $ sudo pip3 install jedi pandas numpy
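A quick way to confirm the install worked is to ask Python whether it can find each module. This is a minimal sketch rather than part of the original setup; the helper name missing_modules is made up for illustration, and it only checks that a module can be located, not that it imports cleanly.

```python
import importlib.util


def missing_modules(names):
    """Return the module names that Python cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# The three modules installed with pip3 above
missing = missing_modules(["jedi", "pandas", "numpy"])

if missing:
    print("Not found:", ", ".join(missing))
else:
    print("All modules found")
```

Save it to a file and run it with python3; anything listed as not found can be installed again with pip3.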

Installing R

Installing R is equally easy:

pi@raspberrypi: $ sudo apt install r-recommended

We also need to install a few development packages:

pi@raspberrypi: $ sudo apt install libffi-dev libcurl4-openssl-dev libxml2-dev

This will allow us to install many packages in R. Now that R is installed, we can start it:

pi@raspberrypi: $ R

Installing packages

Once inside R, we can install packages using install.packages('name') where name is the name of the package. For example, to install ggplot2 (to install tidyverse, simply replace ggplot2 with tidyverse):

> install.packages('ggplot2')

To load it:

> library(ggplot2)

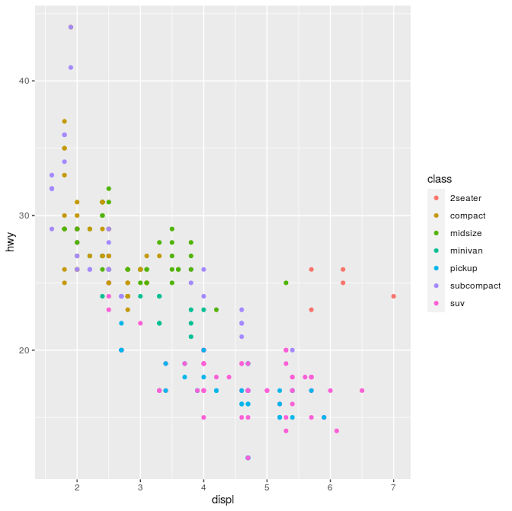

And we can now use it. We will use the mpg dataset, plot engine displacement against highway miles per gallon, and color the points by vehicle class:

> ggplot(mpg, aes(displ, hwy, colour=class)) + geom_point()

Combining R and Python

There are two ways to go about this: call R from Python, or call Python from R. Here, we will call Python from R.

First, we need to install reticulate (the package that interfaces with Python):

> install.packages('reticulate')

And load it:

> library(reticulate)

We can verify which Python binary reticulate is using:

> py_config()
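To cross-check what py_config() reports, the same details can be printed from Python itself. This is a small sketch, assuming it is run with python3 from a terminal; the helper name interpreter_summary is mine, not something reticulate provides.

```python
import platform
import sys


def interpreter_summary():
    """Collect basic facts about the Python interpreter running this code."""
    return {
        "executable": sys.executable,          # path to the python binary
        "version": platform.python_version(),  # for example "3.x.y"
        "machine": platform.machine(),         # CPU architecture string
    }


for key, value in interpreter_summary().items():
    print(f"{key}: {value}")
```

If the executable path differs from the one py_config() shows, reticulate is bound to a different interpreter than the one python3 starts.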

Then we can use it to execute some Python code. For example, to import the os module and call os.listdir() from R (R's $ works much like Python's .):

> os <- import('os')

> os$listdir('.')
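For comparison, here is the plain Python that such a call maps onto. With reticulate, os$listdir('.') in R reaches the same function as os.listdir('.') below; listing the current directory is only an assumption made for this example.

```python
import os

# List the current working directory, sorted so the output order is stable
entries = sorted(os.listdir("."))

for name in entries:
    print(name)
```

The only real difference on the R side is the accessor: $ where Python uses a dot.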

Or even enter a Python REPL:

> repl_python()

>>> import pandas as pd

>>>

Type exit to leave the Python REPL.
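As an example of what can be typed at the >>> prompt, the sketch below builds a small pandas DataFrame and summarises it. The values are made up for illustration (they only mimic the displ, hwy and class columns of mpg), and the variable names are mine.

```python
import pandas as pd

# Hypothetical toy values, loosely shaped like the mpg columns used earlier
cars = pd.DataFrame({
    "displ": [1.8, 2.0, 3.1, 5.7],
    "hwy": [29, 31, 26, 17],
    "class": ["compact", "compact", "midsize", "suv"],
})

# Mean highway mileage per vehicle class
hwy_by_class = cars.groupby("class")["hwy"].mean()
print(hwy_by_class)
```

After typing exit, objects created in the Python REPL remain reachable from R through reticulate's py object, for example py$cars.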

One more trick: Radian

We will now exit R (quit()) and install radian, a command-line REPL for R that is fully aware of reticulate and its Python integration:

pi@raspberrypi: $ sudo pip3 install radian

pi@raspberrypi: $ radian

This is just like the R REPL, only better. And you can switch to Python very quickly by typing ~:

r$> ~

As soon as ~ is typed, radian enters Python mode by itself:

r$> reticulate::repl_python()

>>>

Hitting backspace at the beginning of the line switches back to the R REPL:

r$>

I'll cover more functionality in a future post.