Description

The code quality and performance of RyuJIT are tracked internally by running Microbenchmarks in our performance lab. We regularly triage the performance issues opened by the .NET performance team. After going through these issues for the past several months, we have identified a few key points.

Stability

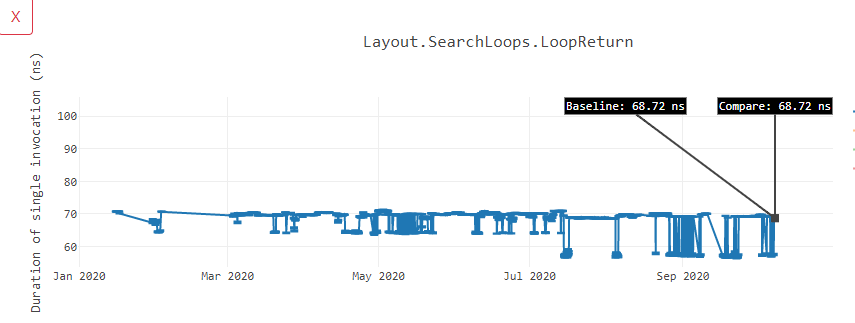

Many times, the set of commits that are flagged as introducing regression in a benchmark, do not touch the code that is tested in the benchmark. In fact, the assembly code generated for the .NET code that is being tested is often identical and yet the measurements show differences. Some of our investigation reveals that the fluctuation in the benchmark measurements happen because of the misalignment of generated JIT code in process memory. Below is an example of LoopReturn benchmark that shows such behavior.

Analyzing benchmarks that regressed for reasons outside the .NET runtime's control is very time-consuming for .NET developers. In the past, we closed several issues, such as #13770, #39721 and #39722, because their regressions were caused by code alignment. A good example we found while investigating those issues is #38586. That change eliminated a test instruction and should have improved the benchmarks. Instead it introduced a regression, because the loop code inside the method became misaligned and the method ran slower.
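The following is a minimal sketch of how identical code can behave differently depending only on where it lands. It rests on a simplifying assumption: the cost of a hot loop grows with the number of 32-byte windows its code touches. This roughly matches how the decoded-instruction cache is organized on many Intel cores. The helper windows_spanned and all offsets and sizes are hypothetical, chosen only for illustration.

```python
# Simplifying assumption for this sketch: the CPU front end handles code in
# 32-byte windows, so a hot loop that touches more windows tends to run slower.
WINDOW = 32


def windows_spanned(start: int, size: int, window: int = WINDOW) -> int:
    """Count the window-aligned blocks touched by code occupying [start, start + size)."""
    first = start // window
    last = (start + size - 1) // window
    return last - first + 1


LOOP_SIZE = 24  # hypothetical loop body size in bytes

# Same machine code, different placement: only the method entry address differs.
print(windows_spanned(0x1000 + 8, LOOP_SIZE))  # 1: the loop fits in one window
print(windows_spanned(0x1010 + 8, LOOP_SIZE))  # 2: the same loop now straddles two

# Removing a (hypothetical) 3-byte instruction ahead of the loop moves it from offset 64 to 61.
print(windows_spanned(64, LOOP_SIZE))  # 1
print(windows_spanned(61, LOOP_SIZE))  # 2
```

Under this model, a change that makes the code smaller can still make a loop slower if it shifts the loop across a window boundary. This is the pattern seen with #38586.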

Alignment issues were brought up a few times in #9912 and #8108. This issue tracks progress toward stabilizing, and possibly improving, the performance of .NET apps that are heavily affected by code alignment.

Performance lab infrastructure

Once we address the code alignment issue, the next big step will be to identify and make the required infrastructure changes in our performance lab, so that it can flag such issues without much interaction from .NET developers. For example, dotnet/BenchmarkDotNet#1513 proposes randomizing memory alignment across benchmark runs to catch these issues early. Once we fix the underlying problem in .NET, we should no longer see bimodal behavior in those benchmarks. After that, if the performance lab does find a regression in a benchmark, we need robust tooling to collect the relevant metrics from performance runs. That way, the developer investigating can easily identify the cause of the regression. Useful metrics include the time spent in various phases of the .NET runtime (jitting, the JIT interface, Tier0/Tier1 JIT code), hot methods, instructions retired during benchmark execution, and so forth.
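Once alignment is under control, repeated runs with randomized memory alignment should cluster around a single value. A split into two groups is the bimodal symptom described above. The check below is a hypothetical heuristic sketched for illustration; it is not BenchmarkDotNet's own multimodality detection. It splits the sorted samples at their largest gap. It then reports "bimodal" only when both sides hold a reasonable share of the samples and the gap is large relative to the median. The thresholds min_cluster_frac and min_gap_ratio are assumptions.

```python
from statistics import median
from typing import List


def looks_bimodal(samples: List[float],
                  min_cluster_frac: float = 0.2,
                  min_gap_ratio: float = 0.05) -> bool:
    """Hypothetical check: split sorted samples at the largest gap and flag the
    run when both clusters are well populated and the gap is large."""
    xs = sorted(samples)
    if len(xs) < 4:
        return False  # too few samples to say anything
    # Largest gap between consecutive sorted samples, with its position.
    gap, i = max((xs[k + 1] - xs[k], k) for k in range(len(xs) - 1))
    left, right = xs[: i + 1], xs[i + 1:]
    smaller_share = min(len(left), len(right)) / len(xs)
    return smaller_share >= min_cluster_frac and gap / median(xs) >= min_gap_ratio


# Hypothetical per-run mean times (ns) from runs with different memory alignment.
print(looks_bimodal([10.0, 10.1, 10.05, 12.0, 12.1, 11.95, 10.02, 12.05]))  # True
print(looks_bimodal([10.0, 10.1, 10.05, 10.02, 10.08, 9.98]))              # False
```

The minimum cluster share keeps a single outlier run from being reported as a second mode.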

Reliable benchmarks collection

Lastly, for developers working on the JIT, we want to identify a set of benchmarks that are stable enough to be trusted for reliable measurements whenever we need to verify the performance of changes to the JIT codebase. This will let us test performance ahead of time and catch potential regressions, rather than waiting for them to show up in the performance lab.

Here is the set of work items we have identified to achieve all of the above:

Code alignment work

- Align the entries of JIT-compiled methods that contain loops at a 32-byte boundary for xarch. (Done: Fix condition to align methods to 32 bytes boundary #42909)

- Devise a mechanism to identify the inner loops (loops that contain no other loops) in a method. (Done: Align inner loops #44370)

- Experiment with heuristics that decide whether a particular inner loop needs alignment. (Done: Align inner loops #44370)

- Calculate the padding that needs to be added to align the identified inner loop. (Done: Align inner loops #44370)

- Basic: Add the padding near the loop header (either at the end of the previous block or at the beginning of the loop header). (Done: Align inner loops #44370)

- Before merging the above work, measure the impact of loop alignment on Microbenchmarks. (Tracked in Measure loop alignment's performance impact on Microbenchmarks #44051 and in Stabilize performance measurement #43227 (comment).)

- Identify benchmarks that are stabilized, or whose performance is improved, by the above work. (Update: see the analysis starting at Stabilize performance measurement #43227 (comment).)
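The steps in this list can be sketched in a few lines. This is a minimal sketch, not the implementation from #44370. It assumes the method entry is already 32-byte aligned (the first item), so a loop header's offset from the entry decides its alignment. An inner loop is any loop that is not the parent of another loop. Padding is computed to the next 32-byte boundary and placed right before the header. The thresholds MAX_PADDING and MAX_LOOP_SIZE, and all names here, are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

BOUNDARY = 32       # align loop headers to 32 bytes (the xarch target above)
MAX_PADDING = 15    # hypothetical: give up if a loop needs more padding than this
MAX_LOOP_SIZE = 96  # hypothetical: large loops gain little from alignment


@dataclass
class Loop:
    name: str
    start: int             # header offset from the (32-byte aligned) method entry
    size: int              # loop body size in bytes
    parent: Optional[str]  # enclosing loop, or None for a top-level loop


def inner_loops(loops: List[Loop]) -> List[Loop]:
    """Inner loops are the loops that are not the parent of any other loop."""
    parents = {loop.parent for loop in loops if loop.parent is not None}
    return [loop for loop in loops if loop.name not in parents]


def padding_for(offset: int, boundary: int = BOUNDARY) -> int:
    """Bytes of padding that move `offset` up to the next multiple of `boundary`."""
    return (-offset) % boundary


def plan_alignment(loops: List[Loop]) -> Dict[str, int]:
    """Decide how much padding to place right before each inner loop header."""
    plan: Dict[str, int] = {}
    shift = 0  # padding planned earlier in the method moves all later code
    for loop in sorted(inner_loops(loops), key=lambda lp: lp.start):
        pad = padding_for(loop.start + shift)
        if pad == 0 or pad > MAX_PADDING or loop.size > MAX_LOOP_SIZE:
            continue  # already aligned, or the heuristic says it is not worth it
        plan[loop.name] = pad
        shift += pad
    return plan


loops = [
    Loop("outer", start=10, size=120, parent=None),
    Loop("inner1", start=20, size=40, parent="outer"),
    Loop("inner2", start=70, size=30, parent="outer"),
    Loop("big", start=150, size=200, parent=None),
]
print(plan_alignment(loops))  # {'inner1': 12, 'inner2': 14}
```

The running shift matters. Padding inserted before inner1 pushes inner2 from offset 70 to 82, so inner2 needs 14 bytes rather than the 26 it would need at its original offset. "big" has no nested loops, so it counts as an inner loop, but the size heuristic rejects it.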

Future work

- Align the entries of JIT-compiled methods that contain loops at the relevant boundary for arm32/arm64. See section 4.8 of https://developer.arm.com/documentation/swog309707/a.

- Combine branch tightening and loop alignment adjustments in a single phase.

- Advanced: If padding proves to be costly, find a way to spread the padding throughout the method so that the loop header still gets aligned. Account for the padding during branch tightening.

- Add padding in blind spots, such as after a jmp or ret instruction that comes before an align instruction.

- Add padding at the end of blocks that have a lower weight than the block that precedes the loop header block.

- Explore deliberately misaligning a method so that its loops become aligned automatically, or with minimal padding, letting us skip most of the padding the loops would otherwise need.

- Perform loop alignment similar to Align inner loops #44370 for R2R code.

- For x86, we should improve the encoding we use for multi-byte NOP instructions. Today we just emit repeated single-byte 0x90, but we could do better, as we do for x64.

- Explore aligning enclosing loops when they can borrow some of the padding needed for the inner loop. For example, in a nested loop, suppose the inner loop needs 10 bytes of padding and the outer loop can be aligned by adding 4 bytes. Then add 4 bytes of padding to the outer loop and 6 bytes to the inner loop. That way, both loops are aligned.
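The last two items can be illustrated together. This is a minimal sketch, not RyuJIT's implementation. split_padding reproduces the 4 + 6 borrowing arithmetic. It assumes the outer loop header precedes the inner one, so padding placed before the outer loop also shifts the inner loop. It borrows only when doing so does not increase the total padding. encode_padding fills a padding run using the multi-byte NOP sequences recommended in the Intel SDM, longest first, instead of repeated 0x90. The greedy longest-first choice, the function names and the offsets 28 and 54 are hypothetical.

```python
from typing import List, Tuple

# Recommended multi-byte NOP sequences from the Intel SDM, keyed by length in bytes.
NOPS = {
    1: bytes([0x90]),
    2: bytes([0x66, 0x90]),
    3: bytes([0x0F, 0x1F, 0x00]),
    4: bytes([0x0F, 0x1F, 0x40, 0x00]),
    5: bytes([0x0F, 0x1F, 0x44, 0x00, 0x00]),
    6: bytes([0x66, 0x0F, 0x1F, 0x44, 0x00, 0x00]),
    7: bytes([0x0F, 0x1F, 0x80, 0x00, 0x00, 0x00, 0x00]),
    8: bytes([0x0F, 0x1F, 0x84, 0x00, 0x00, 0x00, 0x00, 0x00]),
    9: bytes([0x66, 0x0F, 0x1F, 0x84, 0x00, 0x00, 0x00, 0x00, 0x00]),
}


def encode_padding(n: int) -> List[bytes]:
    """Fill n bytes with as few NOP instructions as possible, longest first."""
    out: List[bytes] = []
    while n > 0:
        k = min(n, max(NOPS))
        out.append(NOPS[k])
        n -= k
    return out


def split_padding(outer_start: int, inner_start: int, boundary: int = 32) -> Tuple[int, int]:
    """Return (outer_pad, inner_pad), letting the outer loop borrow inner padding.

    Assumes the outer header precedes the inner header, so padding placed
    before the outer loop also shifts the inner loop.
    """
    inner_alone = (-inner_start) % boundary
    outer_pad = (-outer_start) % boundary
    if outer_pad > inner_alone:
        return 0, inner_alone  # borrowing would increase total padding
    return outer_pad, inner_alone - outer_pad


outer_pad, inner_pad = split_padding(outer_start=28, inner_start=54)
print(outer_pad, inner_pad)                          # 4 6
print([p.hex() for p in encode_padding(inner_pad)])  # ['660f1f440000']
print(len(encode_padding(10)))                       # 2 instructions instead of 10
```

With these offsets, aligning only the inner loop costs 10 bytes. Borrowing aligns both loops for the same total of 4 + 6 bytes, and each run of padding is emitted as a single instruction.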

Performance tooling work

- Add random memory alignment. Tracked by Make memory alignment more random BenchmarkDotNet#1513 and Refactor initialization logic to allow for enabling Memory Randomization performance#1587

- Add tooling in PerfView and BenchmarkDotNet analyzers to surface the required metrics. (WIP)

- Perform a SuperPMI collection of Microbenchmarks so the codegen team can easily test the impact of their changes on the generated code of various benchmarks. (Superpmi on Microbenchmarks #47900)

- Add a new event that tracks memory allocated for JIT code and can be surfaced in PerfView through JITStats. (PRs: New event to track memory allocation for JIT code #44030 and Display JIT Allocated Heap Size microsoft/perfview#1289)
