AWS Machine Learning Blog

Category: Generative AI

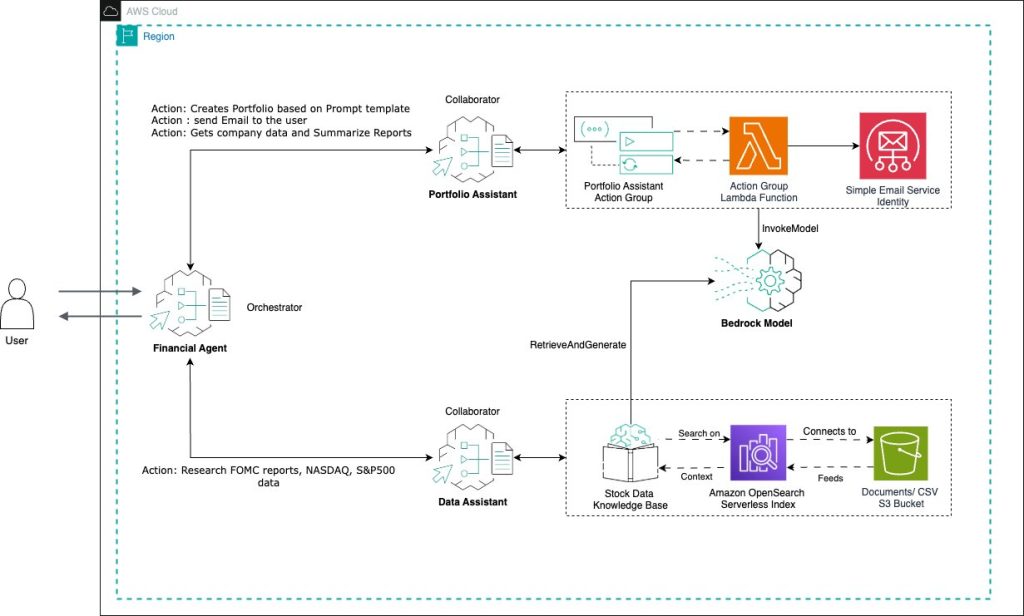

Build a gen AI–powered financial assistant with Amazon Bedrock multi-agent collaboration

This post explores a financial assistant system that specializes in three key tasks: portfolio creation, company research, and communication. It illustrates the use of multiple specialized agents within the Amazon Bedrock multi-agent collaboration capability, with particular emphasis on their application in financial analysis.

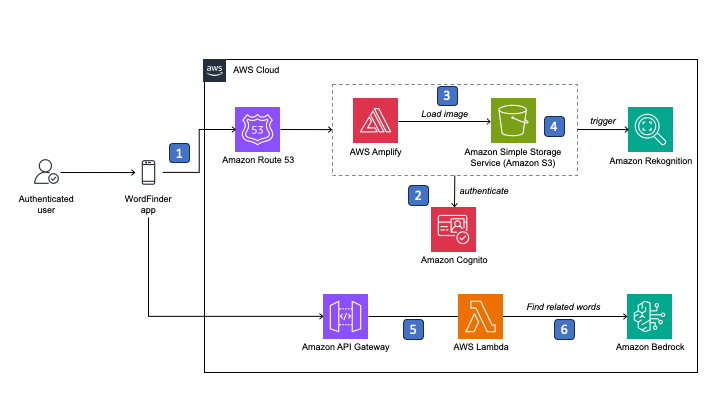

WordFinder app: Harnessing generative AI on AWS for aphasia communication

In this post, we showcase how Dr. Kori Ramajoo, Dr. Sonia Brownsett, and Prof. David Copland from QARC, together with Scott Harding, a person living with aphasia, used AWS services to develop WordFinder, a mobile, cloud-based solution that helps individuals with aphasia increase their independence through the use of AWS generative AI technology.

Best practices for Meta Llama 3.2 multimodal fine-tuning on Amazon Bedrock

In this post, we share comprehensive best practices and scientific insights for fine-tuning Meta Llama 3.2 multimodal models on Amazon Bedrock. By following these guidelines, you can fine-tune smaller, more cost-effective models to achieve performance that rivals or even surpasses much larger models—potentially reducing both inference costs and latency, while maintaining high accuracy for your specific use case.

Extend large language models powered by Amazon SageMaker AI using Model Context Protocol

The Model Context Protocol (MCP) proposed by Anthropic offers a standardized way of connecting foundation models (FMs) to data sources, and now you can use this capability with SageMaker AI. In this post, we present an example of combining the power of SageMaker AI and MCP to build an application that offers a new perspective on loan underwriting through specialized roles and automated workflows.

Amazon Bedrock Model Distillation: Boost function calling accuracy while reducing cost and latency

In this post, we highlight the advanced data augmentation techniques and performance improvements in Amazon Bedrock Model Distillation with Meta’s Llama model family. This technique transfers knowledge from larger, more capable foundation models (FMs) that act as teachers to smaller, more efficient models (students), creating specialized models that excel at specific tasks.

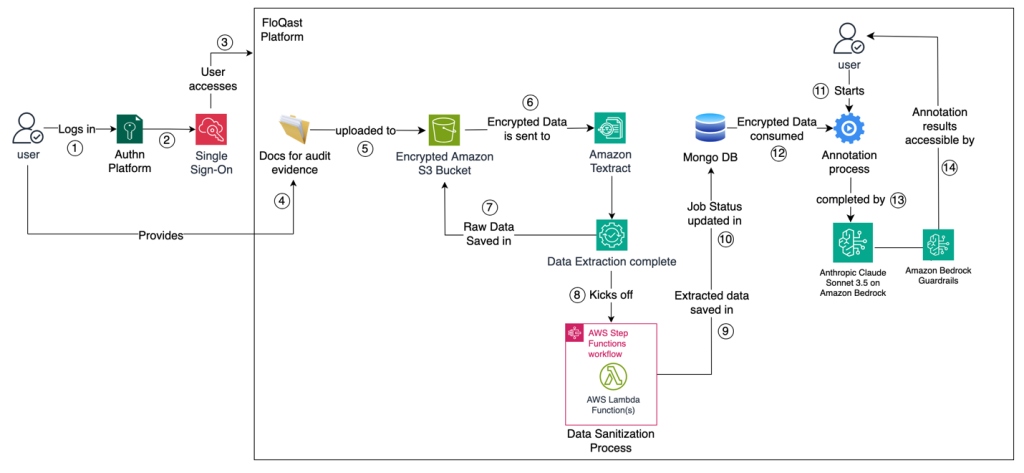

FloQast builds an AI-powered accounting transformation solution with Anthropic’s Claude 3 on Amazon Bedrock

In this post, we share how FloQast built an AI-powered accounting transformation solution using Anthropic’s Claude 3 on Amazon Bedrock.

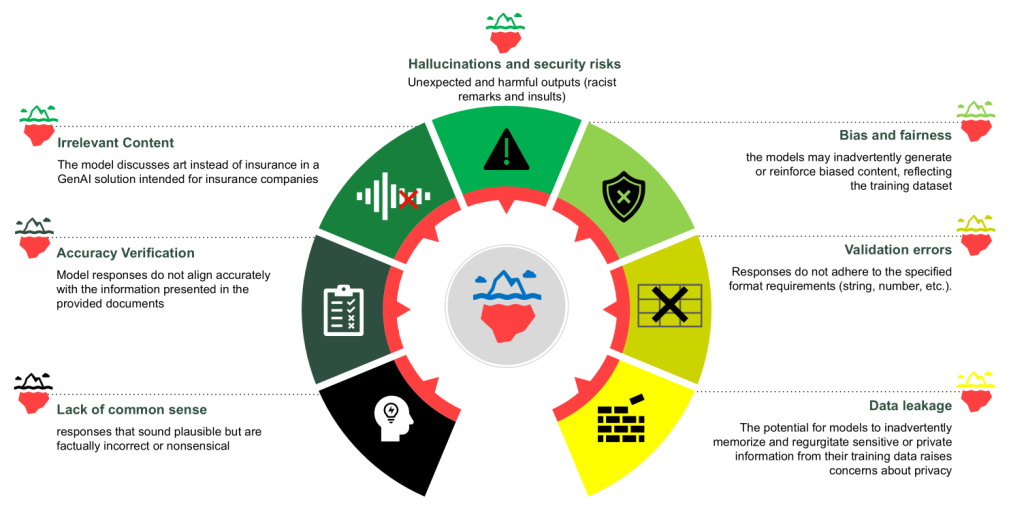

Insights in implementing production-ready solutions with generative AI

As generative AI revolutionizes industries, organizations are eager to harness its potential. However, the journey from production-ready solutions to full-scale implementation can present distinct operational and technical considerations. This post explores key insights and lessons learned from AWS customers in Europe, Middle East, and Africa (EMEA) who have successfully navigated this transition, providing a roadmap for others looking to follow suit.

Responsible AI in action: How Data Reply red teaming supports generative AI safety on AWS

In this post, we explore how AWS services can be seamlessly integrated with open source tools to help establish a robust red teaming mechanism within your organization. Specifically, we discuss Data Reply’s red teaming solution, a comprehensive blueprint to enhance AI safety and responsible AI practices.

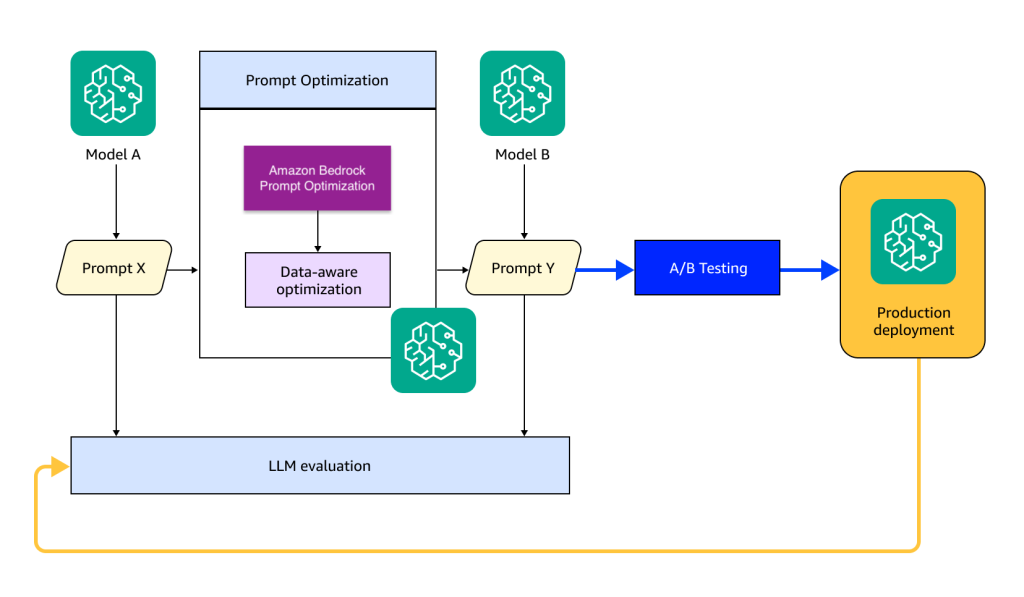

Improve Amazon Nova migration performance with data-aware prompt optimization

In this post, we present an LLM migration paradigm and architecture, including a continuous process of model evaluation, prompt generation using Amazon Bedrock, and data-aware optimization. The solution evaluates model performance before migration and iteratively optimizes the Amazon Nova model prompts using a user-provided dataset and objective metrics.

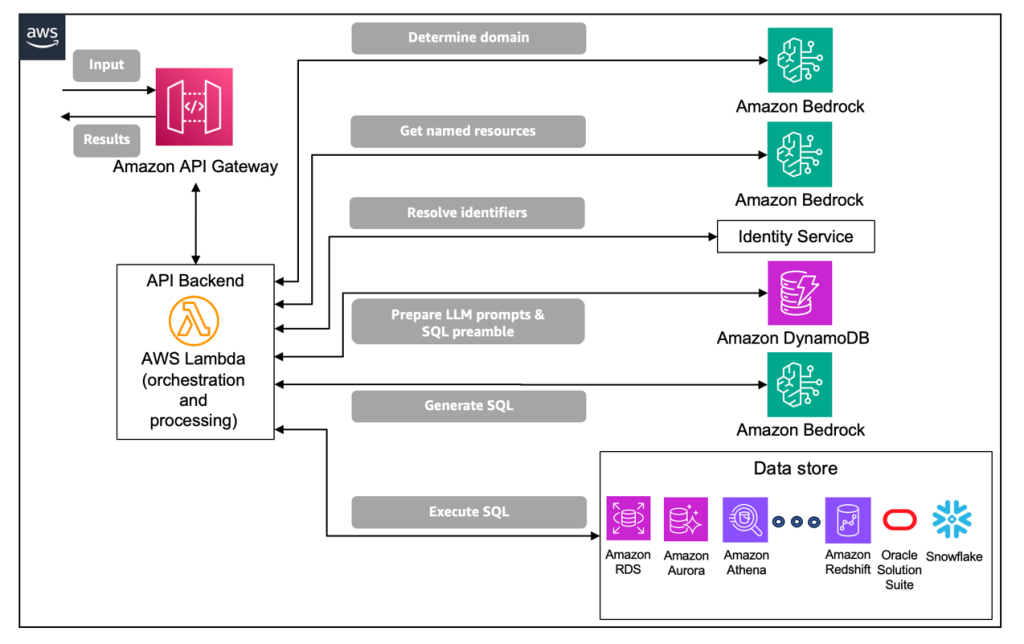

Enterprise-grade natural language to SQL generation using LLMs: Balancing accuracy, latency, and scale

In this post, the AWS and Cisco teams unveil a new methodical approach that addresses the challenges of enterprise-grade SQL generation. The teams were able to reduce the complexity of the NL2SQL process while delivering higher accuracy and better overall performance.